What Code Red' Means at OpenAI-and Why Now

Sam Altman didn't whisper. He hit the alarm. The Code Red' memo told teams to drop side projects and make ChatGPT faster, more reliable, more personal, and less overcautious. That includes tuning model behavior and cutting needless refusals for everyday tasks. The goal is simple, keep users happy while rivals push hard.

Why now? The leaderboard keeps rotating. Since GPT-3.5 in November 2022, we've seen rapid upgrades across the board. Multimodal, long context, tool use, memory features, and coding agents are table stakes. Gemini 3 leaped on reasoning benchmarks. Opus 4.5 won mindshare with coding. Grok 4 pushes real-time, fast iterations, and distribution through X.

Here's the part founders should care about. Model quality is volatile. That volatility hits your agents, your costs, and your roadmap. A bad week of latency or refusals can break user trust and wreck margins. Code Red' is OpenAI admitting the game is live, not settled.

Lens 1 - Revenue and Userbase: Momentum vs. Moats

Let's start with the money and the mouths to feed. OpenAI has massive consumer reach through ChatGPT and growing enterprise deals. Google has the deepest distribution in the industry with Search, Android, Chrome, and Workspace. Anthropic sells reliability and safety to companies that pay for uptime, steady quality, and sane defaults. xAI can bundle Grok across X subscriptions and lean on a constant firehose of real-time data.

OpenAI's near-term defensibility comes from sheer usage and product velocity. The enterprise moat is still forming, and that's where Google and Anthropic are dangerous. Google can inject Gemini into everything, which compresses acquisition costs and drives default usage. Anthropic is quietly loved by teams that want predictable behavior in production. xAI's distribution is different, but real, especially where news, creators, and live trends matter.

I'll be blunt. Distribution still wins. If you can land in default workflows, you win seats by inertia. That's Google's edge. But momentum also wins, and OpenAI still ships fast, with a rabid user base that forgives small misses if the product keeps improving week after week.

Lens 2 - Fundraising and Runway: Who Has the Longest Fuse?

War chests matter because training and inference costs do not get cheaper fast enough to offset ambition. Here's the lay of the land.

OpenAI

OpenAI keeps raising, keeps partnering, and keeps locking in compute. Hyperscaler deals are not just cash, they're access to training clusters, inference scaling, and favorable pricing. That buys time to iterate, retrain, and roll out new models while holding the consumer crown.

Anthropic

Anthropic closed mega-rounds and lined up multi-cloud alliances. Rumors of an IPO-ready posture are not random. It's a bet on long-term, safety-first revenue from enterprises that can't risk flaky agents. That customer type churns less and pays more for reliability.

Google/DeepMind

Google has, for all practical purposes, unlimited internal funding for AI. Add a TPU roadmap and in-house research, and you get real pricing power. If Google wants to compress margins to win distribution, it can. That's a problem for everyone else.

xAI

xAI raised big and has unique distribution through X. Tie-ins with hardware and other ecosystems are in play. The interesting angle is bundling: if Grok becomes a feature inside other high-usage products, it bypasses classic CAC and drives new data loops.

Who has the longest fuse? Google. Who has the most to lose if the consumer crown slips? OpenAI. Who has the cleanest enterprise story? Anthropic. Who is the wildcard for real-time and creator flows? xAI.

Lenses 3 & 4 - Community Vibes and Benchmarks on the Ground

Let's talk usage, not press releases. Builders keep telling me they reach for Opus 4.5 and Claude Code for tough coding tasks. Tool use feels crisp. Fewer weird refusals. Longer context holds up better across multi-step chains. GPT-5 has crazy high ceilings on complex reasoning and planning, but the early vibe was mixed because people expected a moonshot on day one. Welcome to hype cycles.

Latency matters. Agents live and die by time-to-first-token and tool round trips. When a model is 30 percent slower one week, your funnels feel it. Reliability is the silent killer. Nothing nukes trust like assistants stalling, looping, or hallucinating IDs in a CRM sync at 3 a.m.

Open source keeps the pressure on. Llama and Mistral push price cuts and faster parity for "basics" like RAG, long context, and structured tool output. That's great for operators because it holds vendors accountable. It's rough for margins, which is why you see aggressive bundling and tiering.

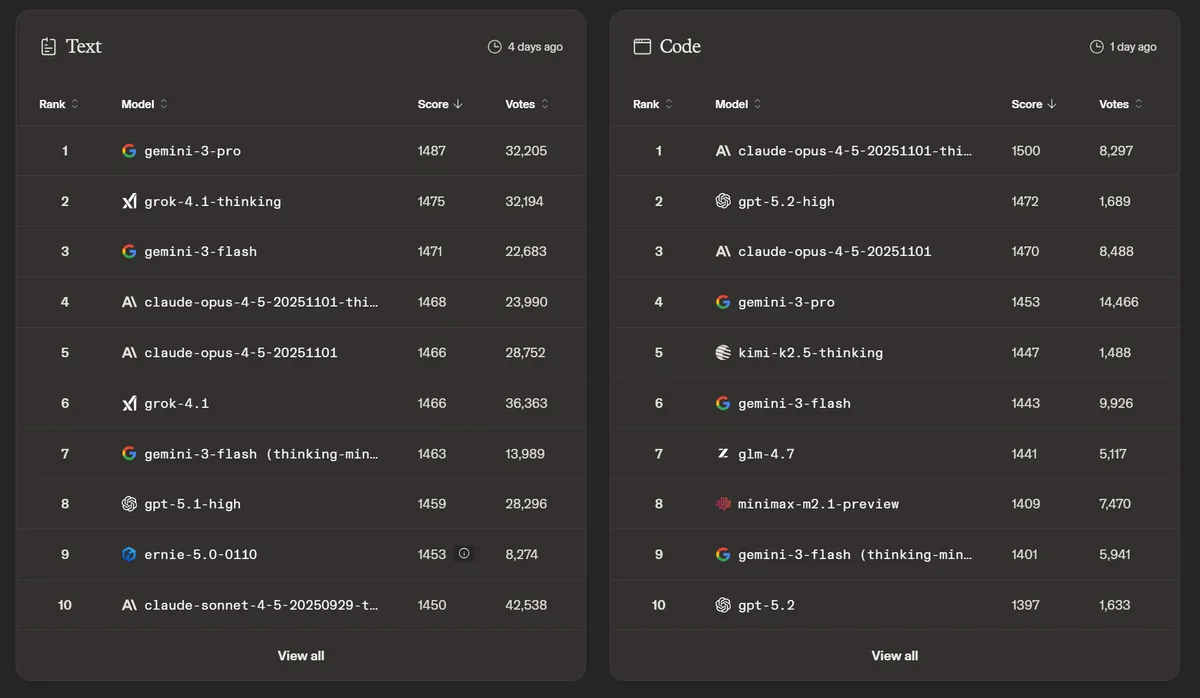

What about benchmarks? Public leaderboards are still useful as a pulse. They are not your production truth. Arena scores move with model updates and crowd votes. One week a model tops coding. Next week a new patch changes the order.

Want a live pulse on community voting? Check the Chatbot Arena leaderboard. It's helpful, just don't build your stack off Elo alone.

Model Face-off - GPT-5 vs. Gemini 3 vs. Grok 4 vs. Opus 4.5

Here's the blunt comparison for agentic work. Reasoning, coding, tools, context, latency, and pricing posture. I'm keeping prices as "posture" because vendor pricing changes fast. Treat this as a capability snapshot, not a forever truth.

| Feature | Tool A | Tool B | Tool C |

|---|---|---|---|

| Pricing | Premium tier | Aggressive enterprise bundling | Premium, usage-based |

| Key Feature | Deep reasoning and planning | Strong multimodal and search tie-ins | Top-tier coding and tool reliability |

Mapping the table to models: Tool A maps to GPT-5, Tool B to Gemini 3, and Tool C to Opus 4.5. Where does Grok 4 fit? Think speed, live distribution via X, and a bias toward real-time knowledge work and trending content flows. For long-horizon, multi-step agents, I'd still pilot Grok 4 alongside others and route per task type.

Strengths by workflow

- Reasoning and planning: GPT-5 and Gemini 3 lead on complex chains, with GPT-5 better at multi-agent orchestration in my testing.

- Coding: Opus 4.5 has the community momentum for coding agents. Strong tool use and fewer derails on long context chains.

- Multimodal: Gemini 3 leans into image and video understanding with tight integration across the Google stack.

- Tool-use fidelity: Opus 4.5 is consistent, GPT-5 is powerful with careful schema design, Gemini 3 shines when tools link to Google data sources.

- Latency: Grok 4 often feels snappy and good for real-time flows. GPT-5 and Opus 4.5 are steady, but tune temperature and tool timeouts to avoid loops.

Pros

- Choice creates leverage on price and features

- Task routing improves accuracy and speed

- Model diversity reduces outage risk

Cons

- More vendors means more contracts and keys

- Routing adds complexity and monitoring load

- Inconsistent updates can break prompts or tools

From GPT-3.5 to Now - Timeline of the LLM Leaderboard Shifts and What to Do Next

The top spot has traded hands more than once since late 2022. Here are the key milestones and the patterns they reveal.

- November 2022: GPT-3.5 hits the mainstream and kicks off the modern chatbot era.

- March 2023: GPT-4 launches with major jumps in reasoning and reliability.

- Early 2024: Claude 3 family lands, with Opus drawing attention for coding and long context.

- Mid 2024: GPT-4o focuses on multimodal speed and cost, pushing near real-time UX.

- 2025: Opus 4.5 strengthens coding agents and tool fidelity; developers flock to it.

- 2025: Grok 4 doubles down on speed and live data vibes through X distribution.

- 2025: Gemini 3 pushes benchmark highs and tight Google ecosystem integrations.

- 2026: GPT-5 ships with strong reasoning and planning, but mixed early expectations.

So what should operators actually do? Treat Code Red' as your cue to harden your stack.

- Decouple agent logic from models - Put prompts, tools, and routing rules behind your own interface. Swap models without changing app code.

- Adopt multi-model routing - Route by task: coding to Opus 4.5, deep reasoning to GPT-5, multimodal to Gemini 3, real-time flows to Grok 4.

- Instrument quality - Track cost per resolved task, first-token latency, tool success rate, and refusal rate for every model.

- Guardrails and retries - Use JSON schemas, function-specific prompts, and idempotent tool calls. Add backoff and provider failover.

- Budget for switching costs - Reserve time for prompt re-tuning and eval rebuilds when a provider updates a major model.

- SLA watch - Monitor rate limits and uptime. Keep a second provider warmed for surge and incident days.

- Leaders rotate, but your production metrics shouldn't

- Own your routing, logging, and evals, not just prompts

- Design for graceful degradation across multiple models

So, is Code Red' justified?

Yes. Gemini 3 pulled ahead on public scores, Opus 4.5 won coding mindshare, and Grok 4 is carving a real-time niche. Altman's memo is a signal: protect the core, fix refusals, tighten latency, and keep the consumer base happy. For the rest of us, the move is clear. Build multi-model from day one and make route decisions with live metrics, not vibes.

Why this matters for you

- If you're a startup, your edge is speed. Don't rebuild every time a vendor jumps. Abstract your model layer now.

- If you're an enterprise, you're buying stability. Ask vendors for real SLAs, rate-limit headroom, and eval transparency.

- If you're a product team, measure conversion impact from latency and refusals. Those two numbers pay for your model bill.

One last thought. Three years ago Google called its own code red' after ChatGPT. Now OpenAI is doing the same. The lesson is not who's up this week. The lesson is that the stack needs to bend without breaking when the leaderboard shifts again next month.

Sources and notes

Altman's Code Red' memo, the focus on personalization, speed, reliability, and fewer overrefusals, and the 800M weekly user stat are drawn from public reporting and summaries of internal notes. The public leaderboard snapshot offers a community pulse, not a production guarantee.