What is vibe coding? (Karpathy's definition, in plain English)

Here's the short version. Vibe coding is when you describe what you want in plain English, then let an AI model write the code. You guide with intent and taste. The model does the heavy thinking and typing.

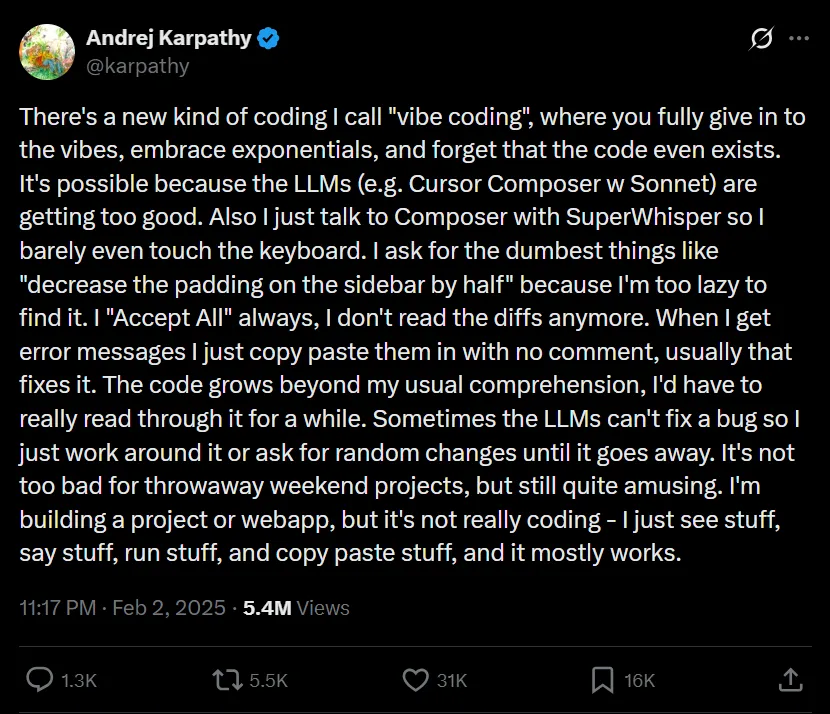

The term blew up after Andrej Karpathy posted about it in February 2025, saying you "give in to the vibes" and almost forget that the code exists, while the model does the coding. He showed prototypes like MenuGen where he set goals, shared examples, gave feedback, and let the LLM handle the rest. That's the vibe.

How it feels in practice: you type prompts like "build a Flask API with a /summarize route," paste a few examples, get a draft, run it, and then say "fix the 500 error and cache results." It's fast. The blank page is gone. You focus on intent and user experience, not syntax.

What vibe coding isn't: it's not a replacement for architecture, tests, security, or real product thinking. If you skip those, the speed you gain now becomes tech debt later.

"The hottest new programming language is English."- Andrej Karpathy

Why vibe coding feels amazing, and where it burns you

Why it's addictive

✅ Pros

- Rapid prototyping. You can go from idea to running code in an hour, not a week.

- No blank page pain. Natural-language prompts beat staring at a fresh repo.

- Creative leaps. Models often propose patterns or libraries you wouldn't think of.

❌ Cons

- Hallucinated APIs. The model "uses" methods that don't exist, then you chase errors.

- Leaky abstractions. It glues things together in ways that break under load or edge cases.

- Copy‑paste debt. You accept chunks you don't fully understand, and it piles up.

Common failure modes I keep seeing

- Silent failures. Happy-path demos pass while background jobs die quietly.

- Library roulette. The model flips between SDKs mid-session and leaves half-baked imports.

- Security gaps. No input validation, sloppy auth, keys in code, missing rate limits.

- Unclear ownership. Who "wrote" the module, and who fixes it next month?

Team impact is real too. Builds get hard to reproduce. People shove secrets into prompts. Nobody knows why a certain pattern was chosen. And when it breaks on Friday, everyone is learning the codebase from scratch at 3am. Not fun.

My better workflow: be the PM, not the passenger

I love the speed, but I don't hand steering to the AI. I act like a solid PM. I set goals, guardrails, acceptance tests, and a simple architecture. Then I let the model draft code. Here's the playbook I use.

Step 1, understand the stack you're about to use

- List APIs and SDKs you'll call. Include versions and links in the prompt.

- Write the data contracts. Show JSON input and output shapes.

- Define auth. OAuth, API keys, or JWT, and where secrets live.

- Note rate limits and quotas. Include retry or backoff rules.

- Draft acceptance tests. What has to pass for "done."
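A data contract is easier for the model to respect when it's written down as something checkable. Here's a minimal sketch, assuming a hypothetical payload with `id`, `email`, and `plan` fields (the same shape as the webhook example later in this post). The name `validate_payload` and the error messages are mine, not from any library.

```python
from typing import Any

# Assumed enum values for the hypothetical contract.
ALLOWED_PLANS = {"free", "pro"}


def validate_payload(payload: Any) -> list[str]:
    """Return a list of contract violations; an empty list means valid."""
    if not isinstance(payload, dict):
        return ["payload must be a JSON object"]

    errors = []
    # Required string fields.
    for field in ("id", "email"):
        value = payload.get(field)
        if not isinstance(value, str) or not value:
            errors.append(f"{field} must be a non-empty string")

    # Enum field: check the type first so unhashable values don't raise.
    plan = payload.get("plan")
    if not isinstance(plan, str) or plan not in ALLOWED_PLANS:
        errors.append("plan must be 'free' or 'pro'")
    return errors
```

Pasting a validator like this into the prompt doubles as a first acceptance test: a valid payload returns an empty list, and anything else returns the reasons it failed.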

Step 2, write a tiny proof of concept for the riskiest bit

Don't build the full app yet. Build a focused POC for the hardest part in a fresh thread or repo. If the risky piece is "verify webhook signatures" or "streaming from a long-running task," do only that. When it works, paste the working code back to the model and say, "Use this exact snippet, it's tested."
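For the webhook-signature case, a POC can be this small. This is a sketch under assumptions: the provider signs the raw request body with HMAC SHA-256 and sends the digest hex-encoded. Real providers vary (some add a prefix or a timestamp, some use base64), so check their docs before trusting it. The function names are illustrative.

```python
import hashlib
import hmac


def sign(secret: bytes, body: bytes) -> str:
    """Compute the hex-encoded HMAC SHA-256 of the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_valid_signature(secret: bytes, body: bytes, received: str) -> bool:
    """Check a received signature against the expected one.

    compare_digest runs in constant time, which avoids leaking how many
    leading characters matched.
    """
    expected = sign(secret, body)
    return hmac.compare_digest(expected.encode(), received.encode())
```

The part worth testing by hand is that the signature is computed over the exact bytes received. Re-serializing parsed JSON before signing is a common way to get mismatches.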

Step 3, start less agentic, then scale up

I start in claude.ai. Chat-first. I can see the code and the reasoning, and I learn while it builds. Once the plan is solid and tests exist, I move to Claude Code for bigger, multi-file refactors and project-wide changes.

Step 4, add tests, linters, and a dumb-simple CI

- Unit tests: for core logic and risky edges. Make the model write them, you review.

- Linters/formatters: Prettier, ESLint, Black, Ruff. Enforce them in the repo.

- Minimal CI: run tests and lint on every PR. Fail early, fix fast.

Once that's in place, go fast. You set intent and constraints. The model fills in the code.

Tools that vibe (and when I use each)

I've rotated across claude.ai, Claude Code, GitHub Copilot, Cursor, and Replit Agents. They all help, just in different ways.

| Use case | claude.ai | Claude Code | Copilot / Cursor / Replit |
|---|---|---|---|
| Learn and plan in public | Great, shows thinking and code | Okay, but shines later | Inline only, limited context |
| Multi-file refactors | Manual paste and review | Excellent agentic edits | Cursor offers strong workflows |
| Inline speed while coding | Not inline | Not inline | Copilot is strongest here |
| Greenfield scaffold | Great for first draft | Great once spec exists | Cursor can scaffold projects |
| Control and learning | High transparency | Medium, powerful edits | High speed, less context |

My default path

- Start in claude.ai for clarity and control.

- Move to Claude Code when I need repo-wide edits or large refactors.

- Use Copilot or Cursor for inline fill-ins, tests, and quick boilerplate.

If you want a broader overview of agent tools beyond coding, check our AI agents guide and our curated list of the Best AI Agents.

Real-world prompts: vibe vs planned for the same feature

Example 1, Receive a webhook and call an API

Vibe prompt

Fast start, but missed signature checks and rate limits.

Build a small Express server that receives a POST webhook and forwards the data to the Acme API. Log results.

- Use JavaScript

- Any port is fine

- Just make it work fast

Spec-led prompt

Fewer bugs. Clear acceptance tests.

Goal: Receive Acme webhooks and upsert to our DB.

Stack:

- Node 20, Express 4, pg@8

- Verify Acme-Signature using HMAC SHA-256 and ACME_SECRET

- Retry 429 with exponential backoff (100ms, 200ms, 400ms)

Data contract:

- Input JSON: { id: string, email: string, plan: "free"|"pro" }

- Output: 200 OK on success, 400 on bad signature

Acceptance tests:

- Valid signature returns 200 and DB upserted

- Invalid signature returns 400

- 429 from Acme retries up to 3 times then logs error

Result: The vibe version "worked" but accepted fake webhooks and crashed on 429. The spec-led version passed tests on the first run and took fewer re-prompts.
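To make the retry rule from the spec-led prompt concrete, here's a rough Python sketch of "retry 429 with exponential backoff (100ms, 200ms, 400ms), up to 3 times, then log an error." The `send` callable is a hypothetical stand-in for whatever actually calls the Acme API and returns an HTTP status code. `sleep` is injectable so a test doesn't have to wait.

```python
import logging
import time
from typing import Callable

logger = logging.getLogger(__name__)

# 100ms, 200ms, 400ms, as listed in the spec.
BACKOFF_DELAYS_S = (0.1, 0.2, 0.4)


def call_with_backoff(
    send: Callable[[], int],
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call send(); on HTTP 429, wait and retry up to three times.

    Returns the last status code seen.
    """
    status = send()
    for delay in BACKOFF_DELAYS_S:
        if status != 429:
            break
        sleep(delay)
        status = send()
    if status == 429:
        logger.error("still rate limited after %d retries", len(BACKOFF_DELAYS_S))
    return status
```

Writing it this way gives the third acceptance test something to assert on: one initial call plus three retries, then an error log instead of a crash.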

Example 2, Simple CRUD with auth

Vibe prompt

Make a Flask app with todo CRUD and login. Keep it simple and fast.

POC-first prompt

We already have a working JWT login POC (below). Use it as-is.

Requirements:

- Flask 3, SQLAlchemy 2, SQLite

- /login returns JWT for valid user

- /todos CRUD requires Authorization: Bearer <token>

- Return 401 for missing/invalid tokens

- Add 3 unit tests for: login ok, create todo ok, create without token fails

Working snippet:

<paste minimal verified JWT issue/verify code>

Result: The POC-first flow removed all auth edge-case bugs and cut retries from 7 to 2. Tests caught a broken update path before it hit staging.
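The "return 401 for missing/invalid tokens" requirement is the kind of rule worth pinning down as a small, framework-free function before wiring it into Flask. This is only a sketch. `check_bearer` is an illustrative name, and `verify_token` is a placeholder for the tested JWT POC (assumed to return a user id for a valid token and `None` otherwise), not a real implementation of it.

```python
from typing import Callable, Mapping, Optional


def check_bearer(
    headers: Mapping[str, str],
    verify_token: Callable[[str], Optional[str]],
) -> tuple[int, Optional[str]]:
    """Return (200, user_id) if the bearer token verifies, else (401, None).

    Assumes headers is a plain mapping keyed by "Authorization"; a real
    framework's header object may handle case-insensitivity for you.
    """
    auth = headers.get("Authorization", "")
    scheme, _, token = auth.partition(" ")
    token = token.strip()

    # Missing header, wrong scheme, or empty token.
    if scheme.lower() != "bearer" or not token:
        return 401, None

    user_id = verify_token(token)
    if user_id is None:
        return 401, None
    return 200, user_id
```

Keeping the check separate from the routes makes the "create without token fails" test a one-liner, and it leaves the verified POC untouched.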

Track results like a hawk

- Time to first working build

- Number of re-prompts

- Test pass rate

- Production error count in first week

Good specs slash retries and bugs. POCs de-risk the hardest parts early. Tests keep your speed from turning into tech debt.

Is vibe coding the future? Where it fits, and where it doesn't

Short answer: it's already here, and it's great for the right jobs. But pure vibes without structure will bite you.

Great fits

- Scaffolding new apps and screens

- Data wrangling, one-off scripts, migrations

- Adapters, SDK wrappers, and internal tools

- Exploratory prototypes and UI experiments

Don't do pure vibes here

- Auth, payments, PII handling, compliance

- Infra changes, Terraform, networking rules

- Anything that needs a formal threat model or audit trail

The hybrid future looks sane to me. Humans own the product intent, constraints, and reviews. Agents grind through boilerplate, repetitive edits, and scaffolding. That's not hype. That's how teams keep moving fast without waking up to a mess.

How I actually run a vibe project

- Scope it: Write the spec, or use the 1 Click Vibe Coding AI App Spec Generator.

- Proof it: Build a tiny POC for the scariest part in a clean thread.

- Draft it: Start in claude.ai for clarity, paste the POC, and set acceptance tests.

- Harden it: Add tests, linters, and minimal CI.

- Scale it: Use Claude Code, Cursor, or Copilot for multi-file edits and fast iteration.

Look, vibe coding is fun. I love the rush. But being the PM, not the passenger, is how you ship without regrets.

Sources and credit

Karpathy popularized "vibe coding" in February 2025 and showed how to steer builds in natural language. You can read his post here: Karpathy on vibe coding.